When to Use AWS Lambda Functions VS Amazon ECS

To Lambda or Not to Lambda. That is the Question!

Serverless architecture is the new craze in the cloud world, with a myriad of serverless services across the various cloud platforms. The concept of not having to patch servers or manage operating system security is incredibly appealing, and the deployment process is fairly seamless. AWS Lambda is Amazon’s serverless functions service.

Despite the clear benefits and appeal, there are some use cases where Lambda might not be the best solution to the technical problems at hand.

The purpose of this article is to highlight use cases where Lambda is technically and financially feasible, and cases where there might be a better solution.

Here’s what I’d like to cover:

- Introduction to AWS Lambda

- Languages Supported by Lambda

- General Lambda Tips for Success

- Introduction to Amazon ECS (Elastic Container Service)

- Creating a Scalable ECS Cluster

- High-Availability for ECS

- ECS Service Autoscaling

- Pricing Comparison

- Use Cases Comparison

- Anti-Patterns for Lambdas

- A True Story of AWS Lambda Costs Shooting Through the Roof

Introduction to AWS Lambda

The Lambda service underpins many of the automation capabilities provided by AWS. Lambda functions can be used for everything from processing web requests via API Gateway, transforming data in Kinesis streams, and processing event notifications from sources such as S3, to serving as a CRON-like scheduled trigger.

To write a simple Lambda function that only uses packages already available in the runtime, you can write it right in the AWS console. For more advanced Lambda functions that use third-party libraries, you’ll need to build a Lambda deployment package, which requires a specific file structure to work.
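For Python functions, the deployment package is a .zip archive with the handler module and any third-party libraries at the root of the archive. As a minimal sketch, assuming your handler lives in a file such as lambda_function.py and you installed dependencies into a local package/ directory (for example with pip install --target package some-library), the standard-library zipfile module can assemble the archive. The file and directory names are illustrative assumptions, not requirements.

```python
import os
import zipfile


def build_deployment_package(handler_file, deps_dir, output_zip):
    """Zip a handler module plus vendored dependencies into a Lambda package.

    Everything is written relative to the archive root, which is where the
    Python runtime looks for the handler module and the libraries it imports.
    """
    with zipfile.ZipFile(output_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        # The handler module sits at the top level of the archive
        zf.write(handler_file, arcname=os.path.basename(handler_file))
        # Dependencies keep their paths relative to deps_dir (e.g. mylib/__init__.py)
        if os.path.isdir(deps_dir):
            for root, _dirs, files in os.walk(deps_dir):
                for name in files:
                    full_path = os.path.join(root, name)
                    zf.write(full_path, arcname=os.path.relpath(full_path, deps_dir))
    return output_zip
```

Calling build_deployment_package("lambda_function.py", "package", "deployment.zip") would produce an archive you can upload in the console or with the AWS CLI.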

I’ll cover how to build Lambda packages in another post, but for now, here’s a link to AWS’s official docs page on the topic.

Supported Languages:

At the time of this article, AWS Lambda supports the following languages and versions:

- Python: 2.7, 3.6

- Go 1.x

- Java 8

- Node.js: 4.3, 6.10, 8.10

- C#: .NET Core 1.0, .NET Core 2.0

General AWS Lambda Tips

- If your code opens a connection to a remote database, construct the connection object outside of your handler function so that it can be re-used across invocations.

  - Inside your handler, check whether the connection is still open and re-open it if necessary.

  - Lambda runs your function in a container-based execution environment that is often, but not always, re-used between invocations, so anything created outside the handler lives only as long as that environment does.

- Configure your Lambda function to use an SQS Dead Letter Queue (DLQ) so that, for asynchronous invocations, events that keep failing (such as bad payloads) are set aside instead of being retried over and over.

  - Configure alerting to notify your team when the length of the DLQ exceeds a certain threshold. This enables proactive remediation of issues.

- Consider writing a function in your Lambda package that synthesizes an example ‘event’ matching the payload your function will handle. In my experience, this makes it easier to test your function locally during the initial phases of development, which speeds things up.

  - AWS provides documentation pages that detail example event structures you can use. Here’s an example page for S3 PUT events.

```python
#!/usr/bin/env python3
# Example: synthesize an S3 PUT event for local testing
import json


def synthesize_s3_event():
    """Return a sample S3 PUT notification shaped like the one Lambda receives."""
    test_event = '''
    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventSource": "aws:s3",
          "awsRegion": "us-east-1",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {"principalId": "yourPrincipalId"},
          "requestParameters": {"sourceIPAddress": "127.0.0.1"},
          "responseElements": {
            "x-amz-request-id": "C3D13FE58DE4C810",
            "x-amz-id-2": "FMyUVURIY8/IgAtTv8xRjskZQpcIZ9KG4V5Wp6S7S/JRWeUWerMUE5JgHvANOjpD"
          },
          "s3": {
            "s3SchemaVersion": "1.0",
            "configurationId": "testConfigRule",
            "bucket": {
              "name": "mybucket",
              "ownerIdentity": {"principalId": "ExamplePrincipalID"},
              "arn": "arn:aws:s3:::mybucket"
            },
            "object": {
              "key": "SomeFile.jpg",
              "size": 1024,
              "eTag": "d41d8cd98f00b204e9800998ecf8427e",
              "versionId": "096fKKXTRTtl3on89fVO.nfljtsv6qko",
              "sequencer": "0055AED6DCD90281E5"
            }
          }
        }
      ]
    }
    '''
    return json.loads(test_event)


# The function that AWS calls
def lambda_handler(event, context):
    print('Lambda is fun!')
    # Pull the bucket and key of the uploaded object out of the first record
    s3 = event['Records'][0]['s3']
    print('New object: s3://{}/{}'.format(s3['bucket']['name'], s3['object']['key']))


# Lambda imports this module instead of running it as a script, so this block
# only executes when you run the file locally (no localDev flag to forget to flip)
if __name__ == '__main__':
    event = synthesize_s3_event()
    lambda_handler(event, None)
```


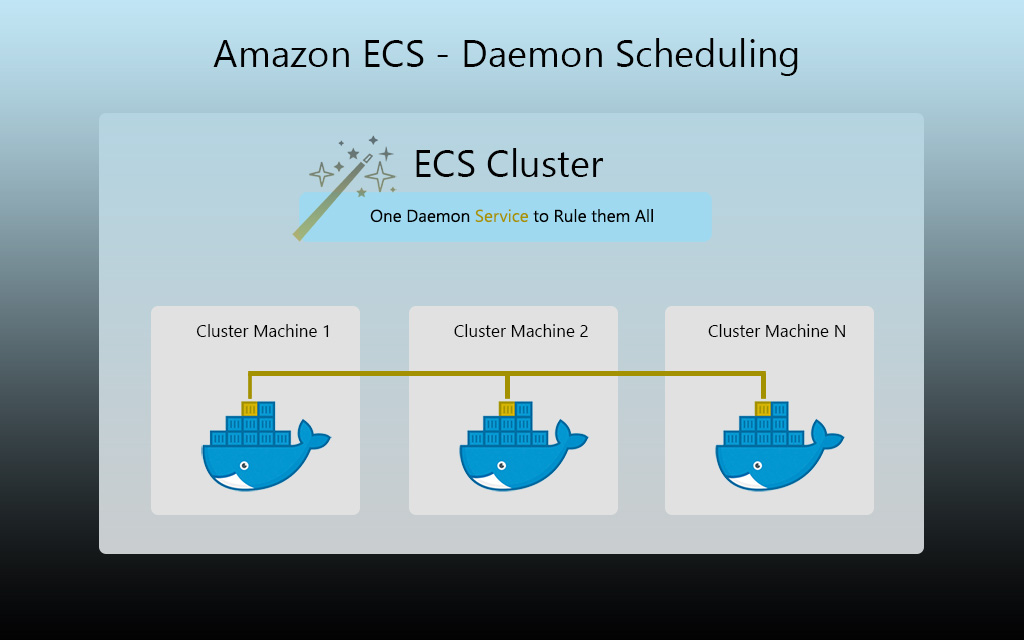
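The connection re-use tip from the list above can be sketched as follows. To keep the example runnable, it uses the standard library's sqlite3 module as a stand-in for a remote database driver (an assumption; with PostgreSQL or MySQL you would swap in that driver's connect call and its own liveness check). The connection is created at module level, so it survives for as long as Lambda keeps the execution environment warm, and the handler only re-opens it when the cached connection is no longer usable.

```python
import sqlite3

# Hypothetical target; a real function would read a database host or URL from
# configuration instead of using an in-memory SQLite database.
DB_PATH = ":memory:"

# Created once per execution environment, outside the handler, so warm
# invocations reuse it instead of paying the connection cost again.
_connection = sqlite3.connect(DB_PATH)


def get_connection():
    """Return the cached connection, re-opening it if it has been closed."""
    global _connection
    try:
        # Cheap liveness check; sqlite3 raises ProgrammingError on a closed connection
        _connection.execute("SELECT 1")
    except sqlite3.ProgrammingError:
        _connection = sqlite3.connect(DB_PATH)
    return _connection


def lambda_handler(event, context):
    conn = get_connection()
    (value,) = conn.execute("SELECT 1").fetchone()
    return {"db_ok": value == 1}
```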

Introduction to Amazon ECS (Elastic Container Service)

ECS is AWS’s Docker container orchestration platform. Amazon also provides a Docker registry service called the Elastic Container Registry, or ECR for short. Your build processes can push tagged images to ECR just as they can to Docker Hub.

In ECS, a group of tightly coupled containers is typically defined together in what is called a task definition. A running instance of a task definition is referred to as a task. In the task definition, you specify how much memory and CPU your containers can use. You may set a soft limit on memory, which reserves that amount for the container but lets it use more when the instance has memory to spare, or a hard limit if you want to prevent your containers from running away with the underlying system’s memory.
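To make the soft/hard distinction concrete: in a container definition, the hard limit is the memory parameter and the soft limit is memoryReservation, both in MiB. The sketch below builds a hypothetical task definition as a Python dict in the shape that boto3's register_task_definition call accepts; the family name, image URI, and sizes are placeholders rather than recommended values.

```python
import json


def container_definition(name, image, cpu_units, soft_mib, hard_mib=None):
    """Build one ECS container definition with memory limits.

    memoryReservation (soft limit) is what ECS reserves when placing the task;
    memory (hard limit) is the ceiling at which the container is killed.
    """
    if hard_mib is not None and hard_mib <= soft_mib:
        raise ValueError("hard limit (memory) must be greater than soft limit (memoryReservation)")
    definition = {
        "name": name,
        "image": image,
        "cpu": cpu_units,  # 1024 CPU units = 1 vCPU
        "memoryReservation": soft_mib,
        "essential": True,
    }
    if hard_mib is not None:
        definition["memory"] = hard_mib
    return definition


task_definition = {
    "family": "example-worker",  # hypothetical task family name
    "containerDefinitions": [
        container_definition(
            "worker",
            "123456789012.dkr.ecr.us-east-1.amazonaws.com/worker:latest",  # placeholder ECR URI
            cpu_units=256,
            soft_mib=512,
            hard_mib=1024,
        )
    ],
}

# With AWS credentials configured, this dict could be passed as
# boto3.client("ecs").register_task_definition(**task_definition)
print(json.dumps(task_definition, indent=2))
```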

Where do these containers run anyway?

An ECS service’s containers are scheduled onto the ECS cluster you specify when you create the service. Standard ECS clusters consist of a group of EC2 instances that, on boot, register with the ECS cluster they are configured to join.

Scaling Story for ECS Cluster

Typically, folks configure an Autoscaling Group for the EC2 instances that underpin their ECS cluster. The usual scale-up/scale-down metric is either based on CPU or memory ‘reservation’ utilization. This is kind of a foreign concept at first, but it’s actually pretty simple.

Demystifying Memory/CPU Reservation

Let’s say you have a single EC2 instance in your cluster with 4GB of memory, and one running container on it with a memory limit of 2GB set in the task definition. The memory reservation utilization for the cluster would be ~50%: the memory reserved by running tasks divided by the memory registered by the cluster’s instances. In practice, an instance registers somewhat less than its full 4GB, because the operating system and the ECS agent need memory too, so the real figure would be slightly higher; for the sake of example, ~50% is accurate enough.
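ECS publishes this figure as the MemoryReservation (and CPUReservation) metric in the AWS/ECS CloudWatch namespace, which is what the Autoscaling Group alarms typically watch. The arithmetic is just reserved memory over registered memory; the 3,800 MiB registered value below is a hypothetical stand-in for "somewhat less than 4GB".

```python
def reservation_percent(reserved_by_tasks, registered_by_instances):
    """Cluster reservation: total MiB reserved by tasks / total MiB registered * 100."""
    total_registered = sum(registered_by_instances)
    if total_registered == 0:
        raise ValueError("cluster has no registered capacity")
    return 100.0 * sum(reserved_by_tasks) / total_registered


# The example above: one 4 GiB instance, one task reserving 2 GiB
print(reservation_percent([2048], [4096]))  # 50.0

# Hypothetical: the instance actually registers ~3,800 MiB after OS/agent overhead
print(round(reservation_percent([2048], [3800]), 1))  # 53.9
```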

High-Availability for ECS

For HA purposes, I recommend spreading your mission-critical ECS clusters across multiple Availability Zones. This is configured at the Autoscaling Group level, by giving the group subnets in more than one AZ.

Why?

If one data center (AZ) runs into a service issue, being able to fail your tasks over to another Availability Zone is important. I’d also suggest configuring your ECS services with the spread(availabilityZone) task placement strategy, which distributes a service’s tasks evenly across AZs. As long as the service runs at least as many tasks as you have AZs, each AZ will host an instance of your containers.
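In the ECS API, that strategy is expressed as a placement strategy of type spread on the field attribute:ecs.availability-zone. A minimal sketch of the create_service arguments, assuming hypothetical cluster, service, and task definition names; the second spread on instanceId and the two-task minimum are optional choices of this sketch, not requirements.

```python
def ha_service_request(cluster, service_name, task_definition, desired_count):
    """Build create_service arguments that spread tasks evenly across AZs."""
    if desired_count < 2:
        # A single task cannot be spread across AZs; run at least two for HA
        raise ValueError("use desired_count >= 2 for multi-AZ availability")
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,  # "family:revision"
        "desiredCount": desired_count,
        "launchType": "EC2",
        "placementStrategy": [
            # First balance tasks across Availability Zones...
            {"type": "spread", "field": "attribute:ecs.availability-zone"},
            # ...then across container instances within each AZ
            {"type": "spread", "field": "instanceId"},
        ],
    }


request = ha_service_request("prod-cluster", "api", "example-worker:1", desired_count=3)
# With AWS credentials configured: boto3.client("ecs").create_service(**request)
```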

ECS Service Autoscaling

Just like with EC2 Autoscaling Groups, you can use CloudWatch Alarms to trigger your ECS service’s autoscaling policies. The ability to autoscale on SQS queue depth, custom metrics, or other data sources, combined with how quickly containers can be provisioned, makes it easy to build very powerful queue workers.
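ECS service autoscaling goes through the Application Auto Scaling API. A minimal sketch of a queue-worker setup, assuming clients that behave like boto3.client("application-autoscaling") and boto3.client("cloudwatch") are passed in; the cluster, service, and queue names, the task bounds, and the backlog threshold are all hypothetical.

```python
def configure_queue_worker_scaling(autoscaling, cloudwatch, cluster, service,
                                   queue_name, min_tasks=1, max_tasks=10,
                                   backlog_threshold=100):
    """Add one ECS task each time an SQS backlog alarm fires; return the policy ARN."""
    resource_id = f"service/{cluster}/{service}"
    dimension = "ecs:service:DesiredCount"

    # 1. Make the service's desired count scalable within bounds
    autoscaling.register_scalable_target(
        ServiceNamespace="ecs",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_tasks,
        MaxCapacity=max_tasks,
    )

    # 2. A step-scaling policy that adds one task whenever its alarm is in ALARM
    policy = autoscaling.put_scaling_policy(
        PolicyName=f"{service}-scale-out",
        ServiceNamespace="ecs",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="StepScaling",
        StepScalingPolicyConfiguration={
            "AdjustmentType": "ChangeInCapacity",
            "StepAdjustments": [{"MetricIntervalLowerBound": 0, "ScalingAdjustment": 1}],
            "Cooldown": 60,
        },
    )

    # 3. A CloudWatch alarm on queue depth that triggers the policy
    cloudwatch.put_metric_alarm(
        AlarmName=f"{queue_name}-backlog-high",
        Namespace="AWS/SQS",
        MetricName="ApproximateNumberOfMessagesVisible",
        Dimensions=[{"Name": "QueueName", "Value": queue_name}],
        Statistic="Average",
        Period=60,
        EvaluationPeriods=2,
        Threshold=backlog_threshold,
        ComparisonOperator="GreaterThanThreshold",
        AlarmActions=[policy["PolicyARN"]],
    )
    return policy["PolicyARN"]
```

A matching scale-in policy (a negative ScalingAdjustment triggered by a low-backlog alarm) is left out for brevity.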

Pricing Comparison

Here is a quick pricing comparison between Lambda and ECS.

AWS Lambda Pricing Dimensions:

- By Request Count

  - Free: 1 million invocations per month

  - $0.20 per million invocations beyond the first free million

- By Duration (GB-seconds)

  - Free: 400,000 GB-seconds per month (confused yet?)

  - Formula: GB-seconds = (configured memory of the Lambda function, in GB) × (duration of each invocation in seconds, rounded up to the nearest 100ms), summed over all invocations

For more information, check out the Lambda Pricing page.
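Putting the two dimensions together, a rough monthly estimate is request charges plus compute charges, each after its free tier. A minimal sketch, assuming the request price above, a compute price of $0.00001667 per GB-second (an assumption based on the published x86 rate; check the pricing page for your region), and the 100ms rounding described above. Because rounding happens per invocation, feeding in an average duration is an approximation.

```python
import math

REQUEST_PRICE_PER_MILLION = 0.20   # USD, from the list above
GB_SECOND_PRICE = 0.00001667       # USD per GB-second (assumed; verify current pricing)
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000


def billed_duration_seconds(duration_ms):
    """Round one invocation's duration up to the nearest 100 ms, in seconds."""
    return math.ceil(duration_ms / 100) * 100 / 1000


def monthly_lambda_cost(invocations, memory_mb, avg_duration_ms):
    """Estimate one month's Lambda bill for a single function, in USD."""
    # Treats every invocation as taking avg_duration_ms (an approximation)
    gb_seconds = invocations * (memory_mb / 1024) * billed_duration_seconds(avg_duration_ms)
    billable_requests = max(0, invocations - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests / 1_000_000 * REQUEST_PRICE_PER_MILLION
    compute_cost = billable_gb_seconds * GB_SECOND_PRICE
    return round(request_cost + compute_cost, 2)
```

For example, under these assumptions a 1024 MB function invoked 10 million times a month comes to about $78.48 at 500ms per call, but about $828.63 if each call slows to 5 seconds, which is why duration matters so much more than request count.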

Amazon ECS Pricing:

For a bare-bones ECS cluster running on EC2 instances, without CloudWatch alarms or logs, ECS itself adds no charge; you pay for the underlying resources:

- EC2 instances

- Application Load Balancers (for web services) to proxy web requests to your containers

- EBS volume storage

- Network bandwidth out of the cluster’s Availability Zone

All things considered, this cost is modest compared with what an equivalent Lambda function can cost your company when applied to an ill-suited use case.

Use Cases Comparison

Amazon ECS and AWS Lambda are both very powerful, but they are not equally cost-effective in the same circumstances. Below are some general guidelines for choosing between the two:

Ideal Use Cases for AWS Lambda

- Short-duration processes that are invoked infrequently to moderately often

- Processing S3 uploads or other events, or subscribing to CloudWatch Logs log groups

  - CloudWatch Logs batches up messages and passes a collection of log events to your Lambda, which keeps your invocation count lower

- Moderately active API Gateway endpoints that process requests quickly and don’t require much memory

- Transforming data passing through a Kinesis Stream or Firehose

- Replacing CRON processes that simply call a route on a web service’s API

  - Accomplished via CloudWatch Event Rules that trigger a Lambda function on a schedule

- Allowing processes outside of AWS to securely make calls to your private VPC resources

  - For example, letting resources outside of AWS trigger builds on a Jenkins server inside your VPC

    - This minimizes your attack surface by removing the need for a VPN tunnel or a publicly accessible Jenkins server
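The CRON replacement mentioned in this list can be wired up in three API calls. A minimal sketch, assuming clients that behave like boto3.client("events") and boto3.client("lambda") are passed in (so the function can be exercised without credentials); the rule name, schedule, and function ARN are hypothetical. Schedule expressions may use either rate(...) or cron(...) syntax.

```python
def schedule_lambda(events, lambda_client, rule_name, schedule, function_arn):
    """Trigger a Lambda function on a schedule via a CloudWatch Events rule."""
    # 1. Create (or update) the scheduled rule, e.g. "rate(15 minutes)"
    rule = events.put_rule(Name=rule_name, ScheduleExpression=schedule, State="ENABLED")

    # 2. Allow CloudWatch Events to invoke the function, scoped to this rule only
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )

    # 3. Point the rule at the function
    events.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": function_arn}])
    return rule["RuleArn"]
```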

Use Cases for ECS Over AWS Lambda

Here’s a list of scenarios where an ECS service might be a better fit for your application.

- Hundreds of thousands of requests per day or more, especially when each request takes 1 second or longer (primarily a cost consideration)

- Long-running or memory-intensive processes

- Calling third-party web services in high-request-volume scenarios

  - Consider what happens if that external API slows down: 100,000 invocations that normally take 500ms each could suddenly take 5 seconds each, multiplying the billed duration tenfold. Check out the story below for a real-world example of Lambda burning through cash.

  - Tip: Set a restrictive but reasonable timeout. Set up alerting on the Errors CloudWatch metric (shown as Invocation Errors in the Lambda console) to catch timeouts when they occur.
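One way to apply that tip inside the function itself is to cap each outbound HTTP call by the time the invocation has left, which the Python runtime exposes through context.get_remaining_time_in_millis(). A minimal sketch; the 5-second ceiling and 1-second safety margin are illustrative assumptions, and call_third_party is a hypothetical helper.

```python
import urllib.request

MAX_CALL_SECONDS = 5.0       # assumed ceiling for the third-party call
SAFETY_MARGIN_SECONDS = 1.0  # leave time to log and return before Lambda times out


def call_timeout(context):
    """Seconds to allow an outbound call, bounded by the invocation's remaining time."""
    remaining = context.get_remaining_time_in_millis() / 1000 - SAFETY_MARGIN_SECONDS
    if remaining <= 0:
        raise TimeoutError("not enough time left in this invocation")
    return min(MAX_CALL_SECONDS, remaining)


def call_third_party(url, context):
    """Hypothetical helper: GET a URL without outliving the Lambda invocation."""
    with urllib.request.urlopen(url, timeout=call_timeout(context)) as response:
        return response.read()
```

Note that urlopen's timeout applies to individual blocking socket operations rather than the whole request, so treat it as a guard rather than a hard deadline.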

A short story of my personal experience with the cost of AWS Lambda:

At one of my previous employers, one of the architects decided to leverage Lambda functions to perform complex processing of objects entering a high-volume Kinesis stream. In the test environments, everything worked fine. Even in production for a while, the cost was manageable.

One month, the Lambda function started taking much longer to execute. This flew under the team’s radar until the end of the month.

We received a massive bill from AWS for Lambda costs, somewhere in the $200k-$300k range. After digging deeper, the development team determined that a bug in a recent deployment was causing the function to take much longer than expected. The bug was fixed, but the bill was still much more than expected.

After hearing about this scenario, I suggested to the architect that we use an ECS worker instead to process some of the request’s logic asynchronously. Part of the logic involved reaching out to a third-party web service, which wasn’t known for its expeditious response times.

The process was revised very quickly thereafter, and we cut our costs dramatically. If I recall correctly, all things considered, the ECS infrastructure only cost us ~$1,000 a month.

Moral of the story:

We should carefully consider the volume of requests, the processing time, the nature of the payloads, and the interactions our apps have with external dependencies, especially those outside of AWS. If the requests can be processed asynchronously, I’d recommend an ECS worker service. If the requests must be processed synchronously, a lightweight Docker image running in ECS behind an application load balancer would be ideal.
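For the asynchronous case, the core of an ECS queue worker is a long-polling loop. A minimal sketch, assuming an SQS queue and a client that behaves like boto3.client("sqs") passed in by the caller; process is a hypothetical stand-in for your application logic. Messages are deleted only after processing succeeds, so failures become visible again after the visibility timeout (and, with a redrive policy, eventually land in a dead letter queue).

```python
def poll_once(sqs, queue_url, process, wait_seconds=20, batch_size=10):
    """Receive one batch from SQS, process each message, delete the successes.

    Returns the number of messages processed successfully.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=batch_size,  # SQS allows at most 10 per call
        WaitTimeSeconds=wait_seconds,    # long polling keeps empty receives cheap
    )
    handled = 0
    for message in response.get("Messages", []):
        try:
            process(message["Body"])
        except Exception as exc:
            # Leave the message on the queue; it reappears after the visibility timeout
            print(f"failed to process {message['MessageId']}: {exc}")
            continue
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        handled += 1
    return handled


def run_worker(sqs, queue_url, process):
    """Poll forever; an ECS service replaces the task if the process exits."""
    while True:
        poll_once(sqs, queue_url, process)
```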

At a certain point, paying for EC2 instances is well worth it, especially with ECS. An elastic cluster of ECS container instances can be used to run multiple applications. We no longer need to put a single application on individual EC2s, which helps us with cost quite a bit, especially for ephemeral tasks such as queue workers that scale in and out throughout the course of the business day.

One of the important facets of architecting applications is choosing the right tool for the job.

I hope you’ve found this article informative, and I’d like to invite you to check out our product, Clouductivity Navigator – a Chrome Extension to improve your productivity in AWS. It helps you get to the documentation and AWS service pages you need, without clicking through the console. Download your free trial today!


About the Author

Marcus Bastian is a Senior Site Reliability Engineer who works with AWS and Google Cloud customers to implement, automate, and improve the performance of their applications.

Find his LinkedIn here.